Iterative evaluative research for pharmacy software

For this project, I worked in-house with cross-functional colleagues, leading and organizing a recurring, iterative cadence of evaluative testing.

the scenario

A national pharmacy chain was creating a new software suite to replace the outdated one used by pharmacists and technicians across their pharmacies. This software would fulfill the end-to-end pharmacy management process, from patient records, to order fulfillment, to inventory.

The Design and Product teams needed confidence that the product they were creating was on the right track. That meant uncovering usability problems, areas that delight, and other findings in order to deliver the best possible product.

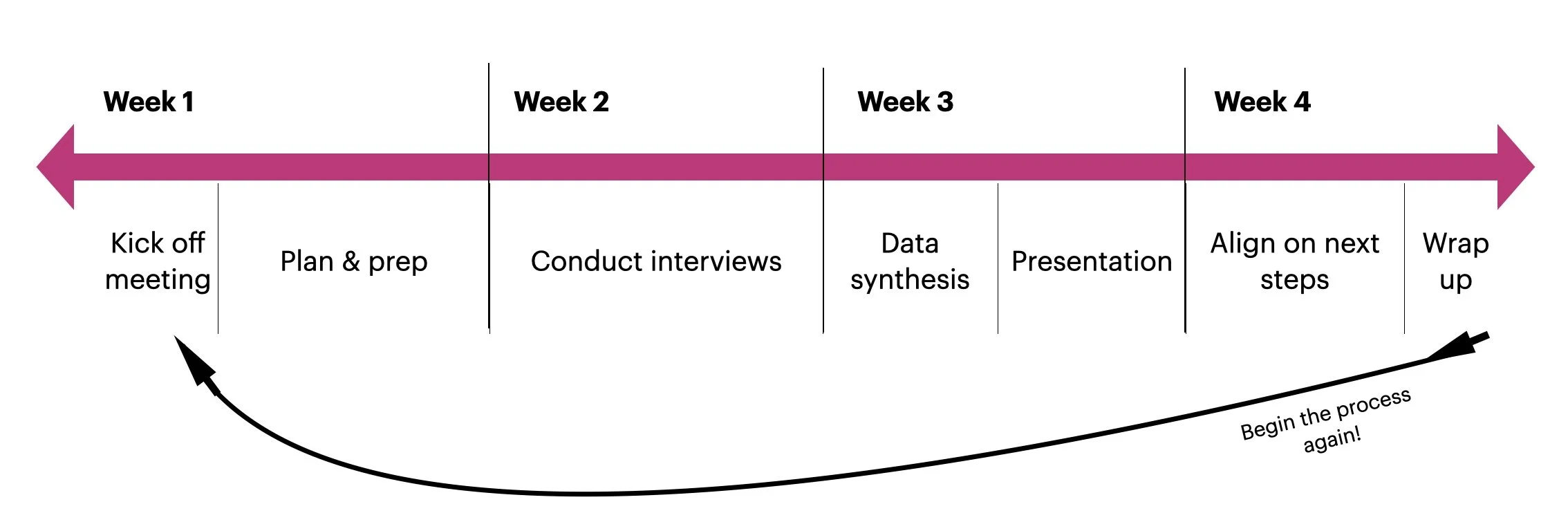

the iterative process

This is what the monthly process looked like. I trained the junior researcher to run the same process so the team always had research coverage: while I was conducting interviews or synthesizing data, the junior researcher was starting a kick-off.

kick off & plan

The first step was to hold a kick off meeting with designers and product managers associated with the designs to be tested. During these kick offs, the designer walked us through the clickable prototype flow, allowing us to ask clarifying questions as needed.

In addition, I collected the following information from the team:

Which end-to-end task flows to test

Study goals: what are we trying to achieve by the end of this study?

Research questions:

What are the overarching questions we should have answered following the study?

What are the more detailed questions regarding different aspects of the prototype flow?

What assumptions does the team have about what they expect to hear?

These assumptions informed the moderator guide and provided a comparison to team member feedback received at the end of the study.

After the kick off, I went heads down to first write the research plan, which included:

Research goals

Overarching research questions

Study schedule

Links to prototype and documents related to study

Assumptions

I shared the draft with the team, requested feedback, then made revisions if necessary.

Next, I created the moderator guide:

including generative questions at the beginning (to continue building confidence that the prototypes were solving the right problems)

ensuring research goals would be achieved

asking questions I deemed necessary based on my knowledge of UX best practices

incorporating the team’s questions (rephrasing as needed)

adding questions to collect quantitative data (to augment the qualitative data and create benchmarks to compare across tests)

Recruiting was done by my business operations colleague ahead of time, to ensure the junior researcher and I had 5 participants consisting of pharmacists and technicians every two weeks.

interviews

During the second week of the project, I conducted remote interviews via Microsoft Teams, covering the following:

Introduction

Describing what we’d cover during the hour

Assuring the participant that they wouldn’t hurt my feelings if they didn’t like something, and that as a researcher I was completely neutral, so I wanted them to be honest

Learning a bit about who they were and their current experience

Walking them through the tasks of the prototype:

Getting first reactions to each screen

Think-aloud usability testing to complete each task

Feedback and Likert ratings after each task (e.g., “On a scale of 1 to 7, with 1 being extremely difficult and 7 being extremely easy, how easy did you find that task?”). If a task wasn’t easy, I uncovered what made it difficult and how they would like to improve it

At the end of each interview:

I asked Likert questions about how much they liked the prototype and how easy they found it overall

I asked them how they would compare it with the current process

Then I had them fill out a System Usability Scale (SUS) survey
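
For readers unfamiliar with how a System Usability Scale survey becomes a single number, here is a minimal sketch of the standard SUS scoring rule: ten items answered on a 1 to 5 scale, with odd-numbered items contributing (response - 1), even-numbered items contributing (5 - response), and the sum multiplied by 2.5. The function name `sus_score` is my own, and this is an illustration rather than the tooling actually used in the study.

```python
def sus_score(responses):
    """Return the 0-100 SUS score for one participant.

    `responses` holds the ten answers in questionnaire order,
    each an integer from 1 (strongly disagree) to 5 (strongly agree).
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for index, response in enumerate(responses):
        if index % 2 == 0:
            # Items 1, 3, 5, 7, 9 are positively worded.
            total += response - 1
        else:
            # Items 2, 4, 6, 8, 10 are negatively worded.
            total += 5 - response
    return total * 2.5
```

A round's overall SUS score would then be the average of the per-participant scores.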

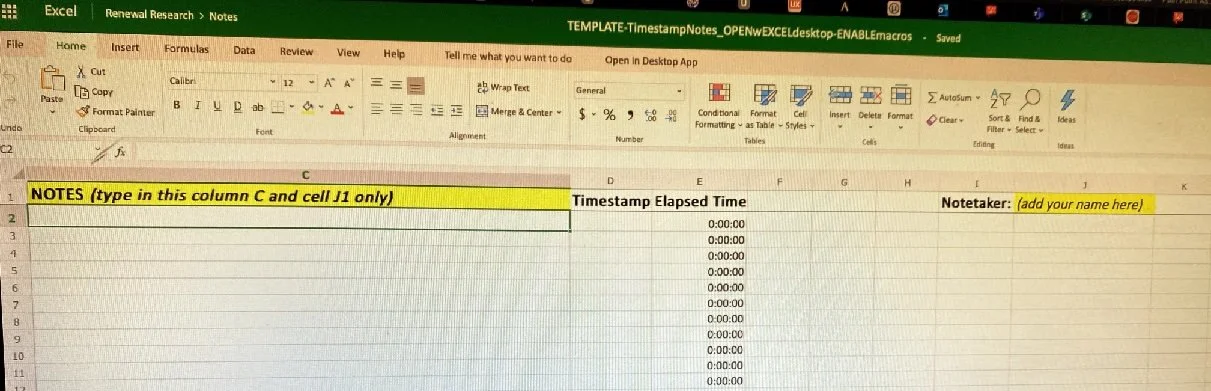

While all this was happening, designers and product managers were observing in the background, with their video off. I had a colleague take notes using the time-stamp template I created in Excel. I trained them ahead of time on note-taking best practices, such as typing word for word as much as possible and documenting participant behaviors.

Following each interview, I held a debrief with the colleagues who had observed the session, so we could discuss and align on the findings we saw come out of the interview, and mark them on a rainbow tracker. This helped create awareness of themes as they were emerging.
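
The idea behind a rainbow tracker can be sketched in a few lines: each finding is recorded alongside the participants who exhibited it, so the most widespread themes surface as the round progresses. This is only an illustration. The actual tracker's layout is not shown here, and the findings, participant IDs, and the `rank_findings` helper below are all hypothetical.

```python
from typing import Dict, List, Set, Tuple


def rank_findings(tracker: Dict[str, Set[str]]) -> List[Tuple[str, int]]:
    """Return (finding, participant count) pairs, most widespread first."""
    counts = [(finding, len(who)) for finding, who in tracker.items()]
    # Sort by count descending, then alphabetically for stable output.
    return sorted(counts, key=lambda pair: (-pair[1], pair[0]))


# Hypothetical data: which participants each finding was observed with.
tracker = {
    "Hypothetical: refill button label is unclear": {"P1", "P2", "P4"},
    "Hypothetical: inventory search felt fast": {"P3"},
    "Hypothetical: missed the allergy banner": {"P1", "P2", "P3", "P5"},
}

for finding, count in rank_findings(tracker):
    print(f"{count}/5 participants: {finding}")
```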

analysis & synthesis

Once the 5th interview and debrief of the round took place, I crafted an email to the project team that laid out high-level, pre-analysis findings.

After this, most of the analysis and synthesis was already done, because the rainbow tracker had been filled out during the debriefs. To ensure nothing was missing, I read through the time-stamped notes and added any findings the tracker lacked. Once I was sure all salient data was reflected, I crafted the report.

My reports always included:

An executive summary

Project scenario and methodology

Findings rated on a color-coded scale in order of severity

On each page of the report that began a new task, I added a visualization of the Likert scale ratings, which always included confidence intervals to show the plausible range of the true average rating given the small sample.

I would also show the prior iteration’s Likert rating graph, to point out how the new score compared with the old.

At the end of each section reflecting a task, I shared recommendations for how to resolve issues for the user

At the end of the entire report, I added the SUS score on a graph, again with confidence intervals, and positioned the prior round’s score on the same graph for comparison
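
To make the confidence intervals mentioned above concrete, here is a minimal sketch of one common approach for small samples: a 95% t-interval around the mean rating. It is an assumption that this method matches the one used in the reports, which is not specified here. The function name `mean_ci_95` is my own, and the interval is clipped to the rating scale (1 to 7 for the task ratings).

```python
import math
import statistics

# Two-sided 95% critical values of Student's t, keyed by degrees of freedom.
T_CRIT_95 = {
    1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571,
    6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262,
}


def mean_ci_95(ratings, scale_min=1, scale_max=7):
    """Return (mean, lower, upper) for a 95% t-interval, clipped to the scale."""
    df = len(ratings) - 1
    if df not in T_CRIT_95:
        raise ValueError("this sketch supports 2 to 10 ratings")
    mean = statistics.mean(ratings)
    # Standard error of the mean, using the sample standard deviation.
    sem = statistics.stdev(ratings) / math.sqrt(len(ratings))
    margin = T_CRIT_95[df] * sem
    return mean, max(scale_min, mean - margin), min(scale_max, mean + margin)
```

With five participants per round the interval is wide, which is why plotting the prior round on the same graph is useful: overlapping intervals are a reminder that a small change in the average may not reflect a real difference.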

design & strategy decisions

Next I presented my report to the cross-functional team of design managers, designers and PMs, leaving space for questions.

Soon after the presentation, I met with the lead designer and we walked one by one through the findings and recommendations, from the most to the least severe. We determined whether to use my recommendation, or revise it based on the designer’s knowledge. We documented each decision in a new deck.

Following this, the lead designer and I walked the product team through our decisions. The PMs used their business and technical knowledge to further refine the decisions if needed.

After this, the designer took the final decisions and incorporated them into the next iteration of the prototype.

post-study

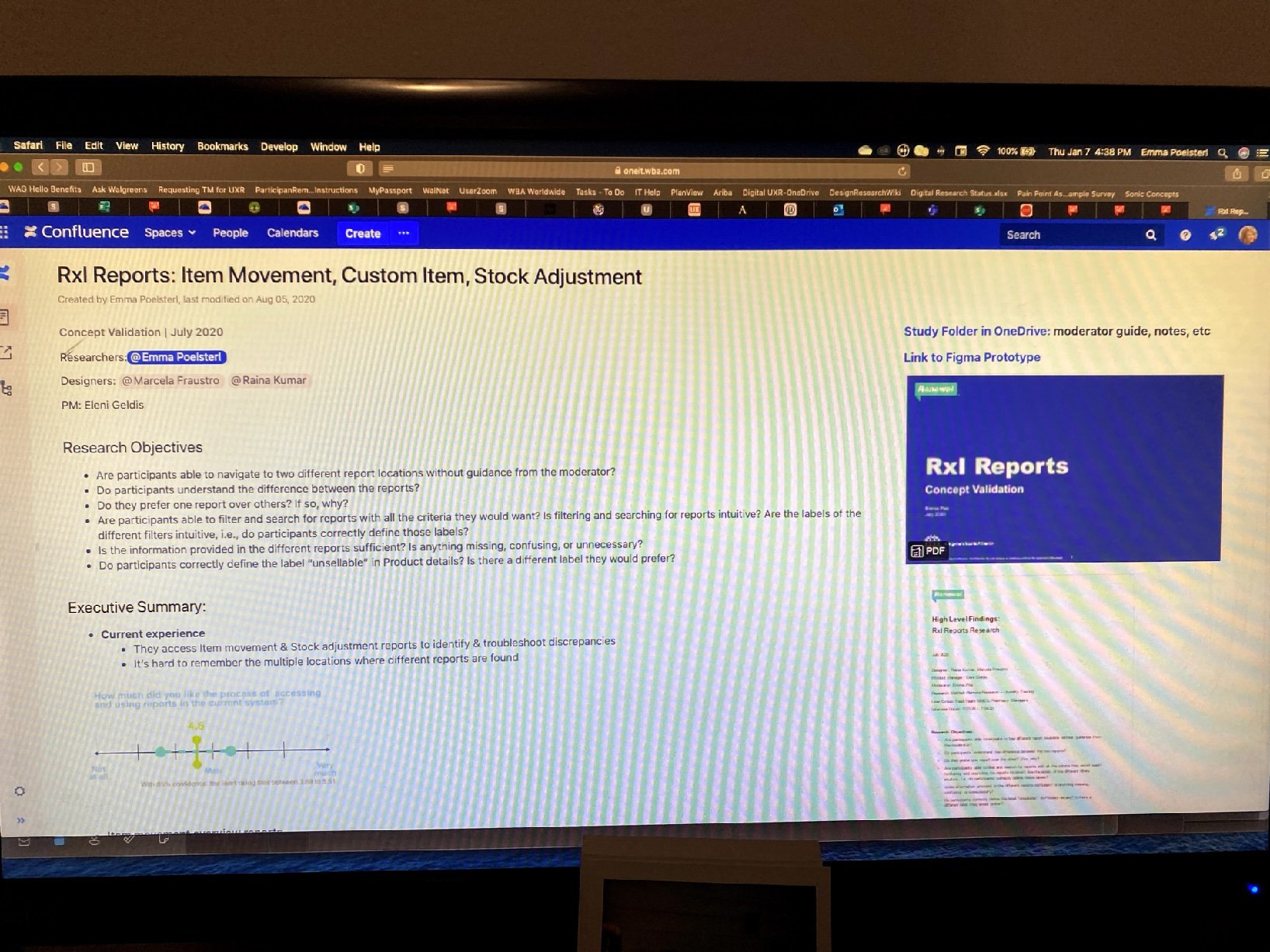

There were a few housekeeping activities I completed to wrap up the project. One was to create a page on the research team Confluence site I’d created. The page included the executive findings and final recommendations, Likert rating and SUS survey graphs, and links to the full report and other materials. This way, I could ensure that the entire cross-functional team had access to the data at any time.

Finally, I would email a link to the Confluence page, a PDF of the report, and a recording of the presentation to the team.

Then, I would begin the process over again!