Concept research for architecture software

This project showcases how I turned a challenging client that neither valued nor wanted research into one that paid extra for me to teach their engineering team the value of research and how to build it into their product development process. My client was a manufacturer of building materials for architects, interior designers, and others who design and construct buildings.

the scenario

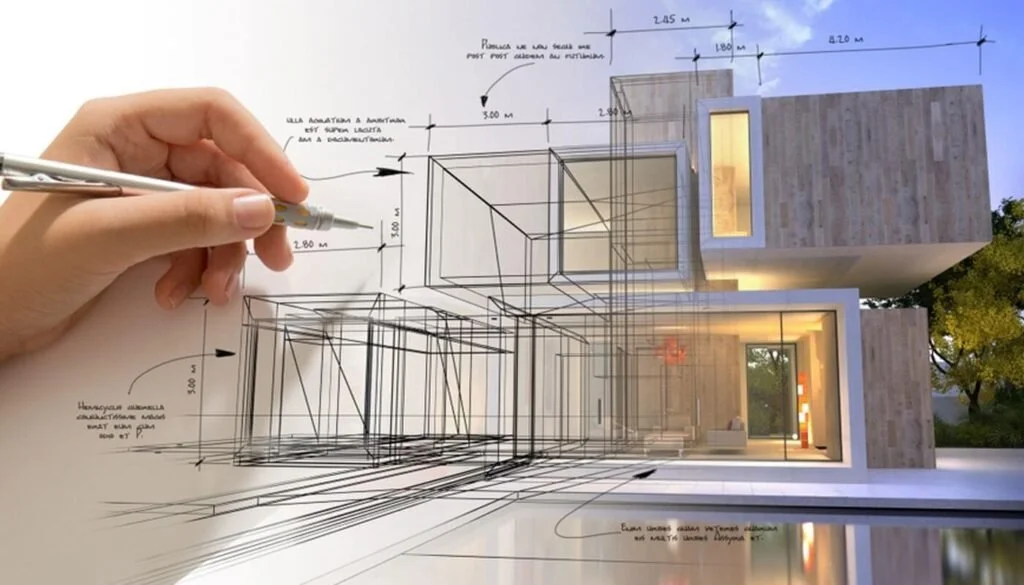

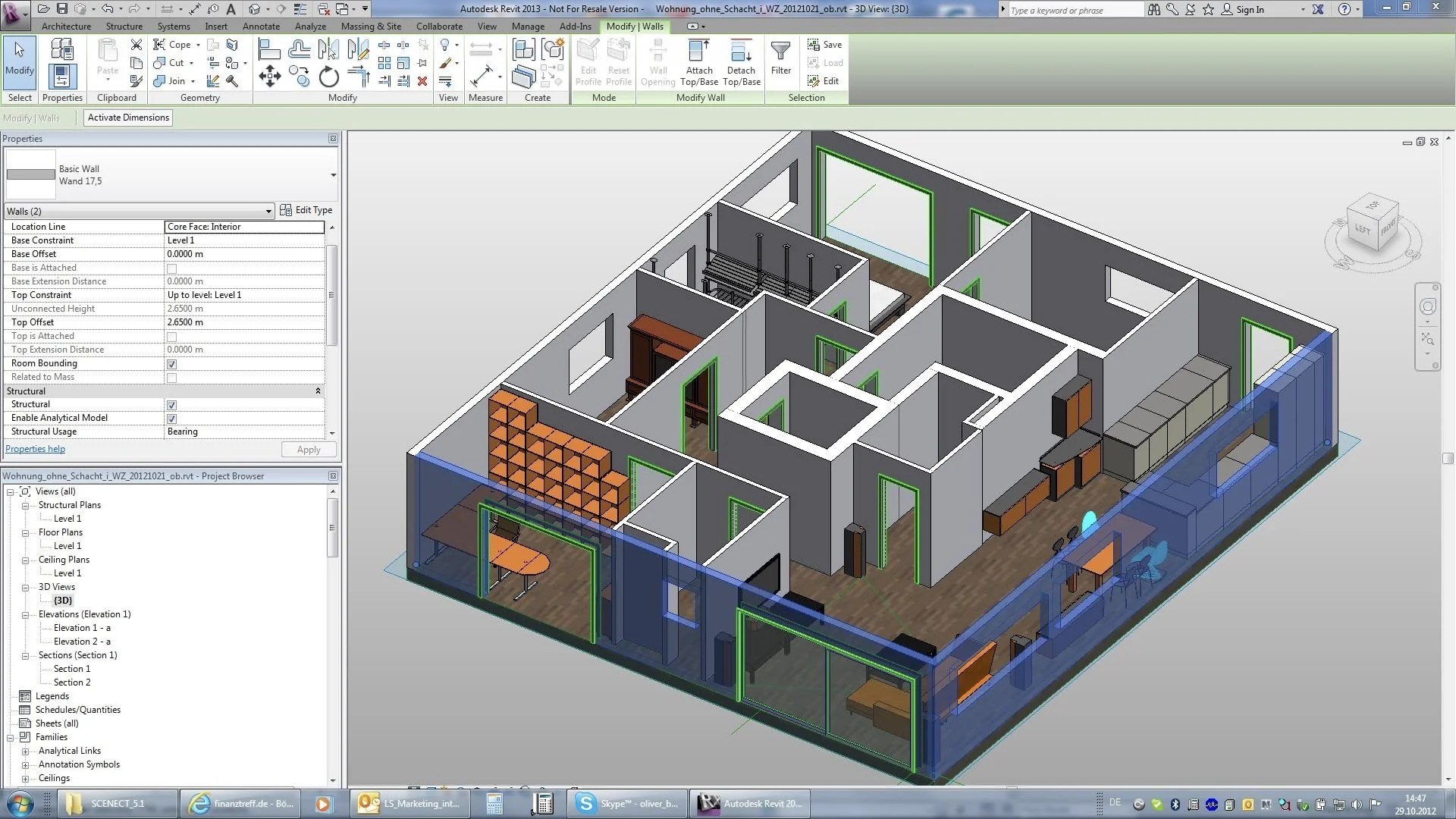

The client approached me wanting to create a plugin for the 4D building information modeling application, Revit. Revit is used by architects, interior designers, and others in the building design industry.

The business goals they were trying to achieve included:

to get more customers to specify their products in Revit models

to make it easier for customers to find Revit families (which are groups of products of the same style that can be installed together)

and to provide a comparable user experience to what their primary competitor offered.

The decision to pursue this idea came out of a strategy research sprint conducted by a different researcher the prior year. Unfortunately, my team and I were not given that data and the former researcher was not available, so we had to take the client’s word on the decision that came out of the sprint.

kick-off challenge: client opposed to research!

My internal team consisted of a PM, a Designer, a Lead Engineer, and myself as the Senior Researcher. We started the project with a kick-off meeting where we discussed everything the project would cover, including research, design, strategy, and development. I led the discussion about research.

However, the client team felt strongly about their plugin idea and were averse to conducting additional research, thinking it wasn’t necessary.

I explained that research was part of our design process and that our designer needed it to be confident in her designs, and I insisted that we conduct AT LEAST one round of research before beginning development. Luckily, I was able to convince them, and they reluctantly agreed to one round of evaluative research on a prototype our designer created based on the idea that came out of the prior year’s research sprint.

research approach

I decided to conduct stakeholder interviews first, followed by evaluative concept testing and high-level usability testing on the prototype. The evaluative sessions would be 1-hour remote interviews with the client’s customers who used Revit.

I believed that evaluative concept testing would uncover high-level usability issues and would be the best way to build confidence in whether customers would use the tool and, if not, what changes would make it worth using.

Since we lacked the data from the strategy sprint, my team felt in the dark and wary about designing the tool the client envisioned. So I pushed to start with stakeholder interviews, to help us build more confidence and clarity around what we were designing and around my research plan.

I built generative questions into the beginning of the evaluative interview in order to understand the space and to verify that the design was solving the right problems and accomplishing the right jobs to be done, especially since no one on my internal team had been part of the strategy research conducted the previous year.

stakeholder interviews

In order to understand the space before launching into the evaluative interviews with their customers, I conducted 1-hour remote stakeholder interviews with five of the client’s employees: four sales reps and one sales manager.

The goals were:

to learn how stakeholders currently interact with the client’s customers related to Revit families

to uncover high and low points customers experience

and to understand their visions of what a successful Revit plugin could look like.

These findings played a large role in the resulting prototype designed for evaluative testing.

sprint 0 research: Planning

The recruiting criteria were based on what I learned about the client’s customers at the kick-off and in the stakeholder interviews, which I used to determine the right representative mix. I oversaw a third-party agency that conducted the recruiting.

Fourteen remote interviews took place with an even mix of architects and designers who used Revit. All but two were customers, though most tended to specify other manufacturers’ products in their models more often than the client’s.

The scenario I gave them was to pretend they were designing a model in Revit and wanted to either decide on or add a ceiling panel using the plugin (I altered the journey of deciding vs. adding based on how they said they currently used Revit in the generative portion of the interview). Then I gave them control of my screen.

Questions to answer via this research:

How do they currently plan and design structures using families and Revit along with other tools? What are their high and low points, and ideas for improvement?

What constitutes an MVP Assembly Solutions Plugin?

What will make this tool intuitive and valuable?

What would get users to download and use this plugin?

I also collected quantitative data during the interviews. I calculated success rates for each task and gathered Likert ratings after participants had viewed the whole prototype (for intuitiveness and for how much they liked it). After completing the interviews, participants took a System Usability Scale (SUS) survey.
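For readers unfamiliar with these metrics, here is a minimal sketch of how a SUS score and a task success rate are typically computed. It uses the standard SUS scoring rule (ten items answered 1 to 5, odd-numbered items contribute the answer minus 1, even-numbered items contribute 5 minus the answer, and the sum is multiplied by 2.5). The function names and the sample answers are hypothetical and are not data from this study, and I am assuming the survey used the standard ten-item form.

```python
from statistics import mean


def sus_score(responses):
    """Score one participant's ten SUS answers (each 1-5) on the 0-100 scale."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS needs exactly ten answers, each from 1 to 5")
    total = 0
    for index, answer in enumerate(responses):
        if index % 2 == 0:
            # Items 1, 3, 5, 7, 9 are positively worded: contribute answer - 1.
            total += answer - 1
        else:
            # Items 2, 4, 6, 8, 10 are negatively worded: contribute 5 - answer.
            total += 5 - answer
    return total * 2.5


def task_success_rate(outcomes):
    """Fraction of participants who completed a task (True = success)."""
    if not outcomes:
        raise ValueError("need at least one task attempt")
    return sum(1 for outcome in outcomes if outcome) / len(outcomes)


if __name__ == "__main__":
    # Hypothetical answers from two participants, for illustration only.
    sample_surveys = [
        [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],
        [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],
    ]
    print("Mean SUS:", mean(sus_score(s) for s in sample_surveys))
    print("Task success:", task_success_rate([True, True, False, True]))
```

Turning the averaged SUS score into a letter grade depends on which published grading scale is applied, so that step is left out of the sketch.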

sprint 0 research: Results

During the generative portion of the research, I learned that participants felt the Revit learning curve was far too steep and the experience too manual, cumbersome, and time-consuming. I also explored jobs to be done for the USG Revit plugin, and found they were to save time, automate, simplify and streamline, and help them make money.

After going through the prototype, the vast majority said they would only use the tool if additional features were added, though a few said they would at least try it as it was. Overall, they were not enthusiastic about the design, and it did not resonate. The additional features they wanted tied in closely with the jobs to be done I had identified in the generative portion.

The quantitative data showed that while they liked the prototype to a certain extent and thought it was fairly intuitive, they gave it a C in the SUS survey. I used this quantitative data to augment the qualitative findings.

another challenge: the push for another round!

Following the research, my team’s challenge was to convince the client not to begin development of the prototype immediately, as they had wanted, and instead to do another round of evaluative research with a revised prototype that reflected the findings from the first research sprint.

Only one round of research had been approved and budgeted by the client, but we strongly believed that we needed another round, since the first prototype did not strongly resonate with participants.

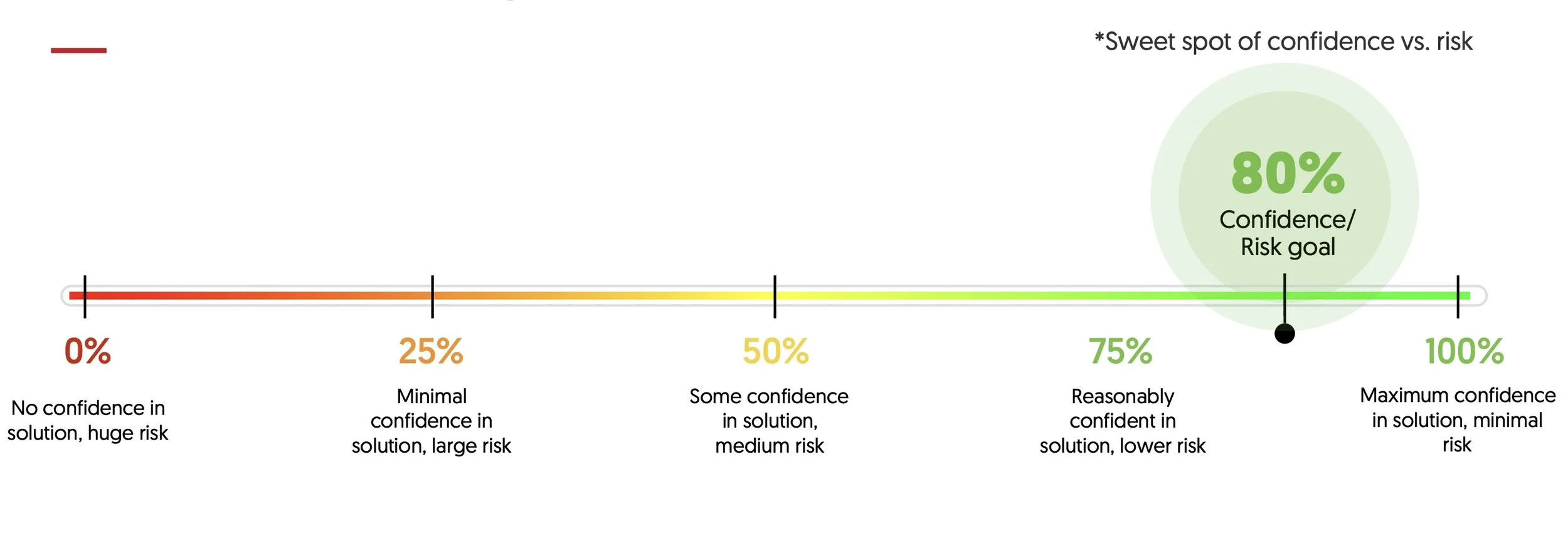

I walked my colleagues through the options that lay before us, and we compiled the advantages, disadvantages, and dependencies for each option. We included a confidence/risk scale and plotted each option on it according to how we perceived it. We showed what the timeline would look like for each option. We included all of this in the report.

The designer had already created a draft prototype based on the findings, so we also included screenshots of that prototype in the report to show what we envisioned would be a more successful tool.

When presenting the readout, I walked the client through the options and explained the pros and cons of each, as well as where they fell on the confidence/risk scale. I walked them through the screenshots of the proposed prototype.

I strongly urged the client to consider a different direction for the tool, as I was certain based on findings that the design as it stood would be unsuccessful and a waste of money for USG.

outcome: Success!

Happily, the client agreed to my recommendation of an additional round of research to test the new prototype, with the caveat that I do the recruiting myself to avoid spending more money and time on a third-party recruiter.

So I had to pivot quickly, recruiting participants in real time between scheduled interviews using a variety of guerrilla methods, including sales reps, prior participants, and LinkedIn; one participant was referred by a colleague at my consulting company.

sprint 0b research: Structure

I recruited 8 architects and 5 interior designers. Of these, 8 were brand-new participants and 5 were repeat participants from the first research sprint. Interviews were 1 hour long and remote.

Participants were given the following scenario: “Pretend you’re designing a model in Revit and you decided on an acoustical canopy ceiling panel that you want to customize using a plugin.” I then gave participants control of my screen.

We wanted to answer the same overarching questions as the first round of research, which were:

How do new participants currently use Revit and families?

What constitutes an MVP Assembly Solutions Plugin?

What will make this tool intuitive and useful?

What would get users to download and use this plugin?

Quantitative methods were also identical to the first round.

sprint 0b research: Results

Much of the generative data matched round 1, especially the pain point of Revit’s steep learning curve.

Halfway through the testing, I found that no one was discovering an important slide-out Properties Panel, which opened by clicking an arrow icon, without my guidance. I advised my team to pivot, and the designer changed the design so that the panel was visible by default, with the ability to hide it. This made a big difference: every remaining participant discovered it without my guidance.

After fully reviewing the prototype, ALL participants said they would want to use this tool, feeling it would streamline their process and save time. This in itself was a strong sign that the design direction I had proposed was correct, since NO ONE had said they would definitely use the previous prototype.

Participants thought it was easier to use than Revit, which would enable less technical colleagues to use Revit, when currently they don’t due to the high learning curve.

Repeat participants said it was an improvement over the previous prototype due to customization and visualization features that had been added.

Many said they were most likely to use it for specialty products, and this would make them more likely to use specialty products in the first place, because it currently just takes too long to configure layouts from scratch in Revit.

Likert ratings were very slightly lower for intuitiveness and a bit higher for how much they liked it. Luckily, I knew from the evaluative findings what had to be done to improve intuitiveness. I also included success rate metrics for each task. Two tasks scored highly, but three scored poorly. Again, through the findings, I knew how to direct the team to fix these issues. In the SUS survey, it received a C+, slightly higher than the C in the previous round. I hypothesized that the second SUS score was held down by the UX issues that emerged, whereas the first prototype scored lower because the concept didn’t resonate in general.

outcome after sprint 0b: Begin development!

Through this research, I was able to show that we were headed in the right direction with the revised concept, and I knew what minor usability improvements needed to be made before the design went into development.

When providing recommendations, I again employed the confidence/risk scale. I shared that in this round, I had higher confidence and lower anticipated risk because this version more closely fulfilled participants’ desired jobs to be done (JTBD) from the first sprint: save time, automate, simplify and streamline, and help them make money.

Next, my team and I delivered a proposed roadmap and next steps for the MVP. Happily, the client agreed with our recommendations.

proof of success: A special invitation!

I’m proud to say that shortly after the second round of research wrapped up, the client project lead asked me to give a presentation to the engineering team, because they wanted to incorporate research into their process from then on. As you may recall, this same project lead originally didn’t want to do any research.

The presentation covered:

The definition of UX Research

Why it’s valuable

Why it’s dangerous not to do it

How it can fit into the product design and development process